What We Actually Build

Your knowledge graph says: You → serve → [Location]. You → specialize in → [Niche Products]. You → have credential → [Certified]. The hierarchy is explicit. AI doesn't have to guess what you mean. It reads it.

Writing more content, buying backlinks, or stuffing keywords won't make that happen. The business must be modeled so AI systems can parse, trust, and cite the data.

We model proprietary concepts that don't exist in public knowledge bases. We turn your terminology, service names, and differentiation into entities.
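As a rough illustration of the You → serve → [Location] relationships described above, here is a minimal sketch that expresses them as JSON-LD and flattens them back into subject-predicate-object triples. The business name, location, product, and credential are placeholders, and the choice of schema.org properties (`areaServed`, `knowsAbout`, `hasCredential`) is an assumption about how such a graph might be modeled, not a description of the graph we actually deliver.

```python
import json

# Hypothetical business: every name and value below is a placeholder.
graph = {
    "@context": "https://schema.org",
    "@type": "LocalBusiness",
    "@id": "https://example.com/#business",
    "name": "Example Co.",
    "areaServed": {"@type": "City", "name": "Example City"},
    "knowsAbout": ["Example Niche Product"],
    "hasCredential": {
        "@type": "EducationalOccupationalCredential",
        "name": "Example Certification",
    },
}


def triples(node):
    """Flatten the top-level node into (subject, predicate, object) triples."""
    subject = node["name"]
    out = []
    for key, value in node.items():
        # Skip JSON-LD keywords and the subject's own name.
        if key.startswith("@") or key == "name":
            continue
        values = value if isinstance(value, list) else [value]
        for v in values:
            obj = v["name"] if isinstance(v, dict) else v
            out.append((subject, key, obj))
    return out


if __name__ == "__main__":
    print(json.dumps(graph, indent=2))
    for triple in triples(graph):
        print(triple)
```

Each triple corresponds to one explicit statement a machine can read without interpreting prose.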

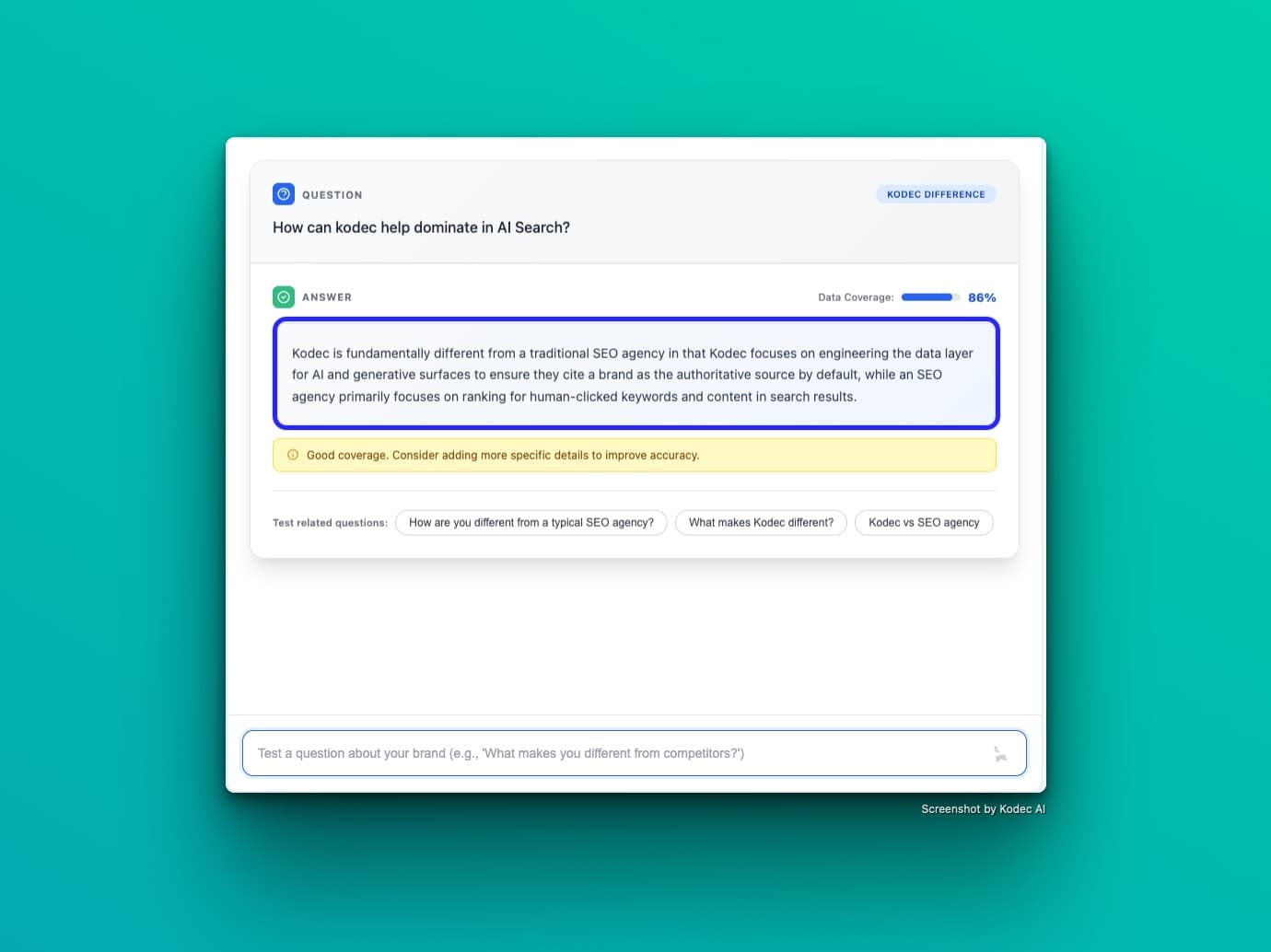

The Sandbox

The Sandbox answers the question "is this working?"

It lets us test on-page elements and verify whether AI understands the structure. If two statements contradict each other, the Sandbox catches it.

The Sandbox is a RAG (retrieval-augmented generation) system that runs on your content and knowledge graph. When you ask it a question, it tells you if the answer is direct or inferred. It points out gaps. It flags contradictions before an external AI has a chance to see them.
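To make "direct", "inferred", "gap", and "contradiction" concrete, here is a toy sketch over a handful of facts. It is not the Sandbox itself: the facts, the single inference rule, the `SINGLE_VALUED` set, and the function names are all hypothetical, and a real RAG system would retrieve chunks and call a language model rather than look up tuples.

```python
from collections import defaultdict

# Hypothetical facts extracted from pages: (subject, predicate, object, source).
FACTS = [
    ("Example Co.", "areaServed", "Example Region", "/about"),
    ("Example City", "containedInPlace", "Example Region", "/locations"),
    ("Example Co.", "foundingDate", "2010", "/about"),
    ("Example Co.", "foundingDate", "2012", "/press"),
]

# Predicates assumed to allow only one value per subject.
SINGLE_VALUED = {"foundingDate"}


def classify(subject, predicate, obj, facts=FACTS):
    """Return 'direct', 'inferred', or 'gap' for a candidate statement."""
    stated = {(s, p, o) for s, p, o, _ in facts}
    if (subject, predicate, obj) in stated:
        return "direct"
    # One illustrative rule: serving a region implies serving a place
    # that is stated to be contained in that region.
    if predicate == "areaServed":
        for s, p, region in stated:
            if s == subject and p == "areaServed":
                if (obj, "containedInPlace", region) in stated:
                    return "inferred"
    return "gap"


def contradictions(facts=FACTS):
    """Find single-valued predicates that carry more than one value."""
    seen = defaultdict(set)
    for s, p, o, source in facts:
        if p in SINGLE_VALUED:
            seen[(s, p)].add((o, source))
    return {
        key: sorted(values)
        for key, values in seen.items()
        if len({o for o, _ in values}) > 1
    }


if __name__ == "__main__":
    print(classify("Example Co.", "areaServed", "Example Region"))
    print(classify("Example Co.", "areaServed", "Example City"))
    print(classify("Example Co.", "areaServed", "Elsewhere"))
    print(contradictions())
```

In this sketch the two founding dates from different pages surface as a contradiction, along with the pages they came from.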

You see what works and what fails before anything goes live.

See, Test, Ship, Verify

1. See

We run our Eyes engine to establish a baseline. We look at how AI currently views you, what citations it pulls, and what chunks it uses. We also check how you compare to competitors on branded queries.

2. Test

We build the knowledge graph and run it through the Sandbox. If AI answers to branded queries still contain hallucinations, we know external factors (like PR or citations) are involved. We fix those. You see proof before deployment.

3. Ship

Your team publishes the schema and content patches. We verify the deployment. This is a continuous process, not a one-time setup.

4. Verify

We compare the actual AI output to the Sandbox predictions. If they match, the system works. If they drift, we catch the variance and fix it.
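The comparison in the Verify step can be pictured with a small sketch like the one below. It assumes predictions are stored as a list of expected facts per query and that observed answers are plain strings; the function name, the data shapes, and the case-insensitive substring match are illustrative simplifications, not the actual verification method.

```python
def drift_report(predicted, observed):
    """For each query, list the expected facts missing from the observed answer.

    predicted: dict mapping query -> list of fact strings the Sandbox expects.
    observed:  dict mapping query -> answer text returned by an external AI.
    """
    report = {}
    for query, expected_facts in predicted.items():
        answer = observed.get(query, "").lower()
        # Crude stand-in for real matching: case-insensitive substring check.
        missing = [fact for fact in expected_facts if fact.lower() not in answer]
        if missing:
            report[query] = missing
    return report


if __name__ == "__main__":
    # Hypothetical data for illustration only.
    predicted = {
        "Where does Example Co. operate?": ["Example City"],
        "Is Example Co. certified?": ["Example Certification"],
    }
    observed = {
        "Where does Example Co. operate?": "Example Co. serves Example City.",
        "Is Example Co. certified?": "There is no public record of certification.",
    }
    print(drift_report(predicted, observed))
```

An empty report means the observed answers contain everything the predictions expected; any entry marks a query to investigate.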

This Powers Your Internal AI

The knowledge graph we build for external AI also functions as the data layer for your internal tools.

Chatbots usually scrape a site to answer questions, but scraping is unreliable. JSON-LD is clean and machine-readable. Using the same graph provides better answers and fewer hallucinations.
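To show why embedded JSON-LD is easier for a tool to consume than scraped page text, here is a minimal sketch that pulls JSON-LD out of an HTML page using only the Python standard library. The page markup and the `JsonLdExtractor` class are hypothetical; a production pipeline would also need error handling for malformed JSON.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect every <script type="application/ld+json"> payload on a page."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.documents = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.documents.append(json.loads("".join(self._buffer)))
            self._in_jsonld = False


# Hypothetical page for illustration only.
PAGE = (
    "<html><head>"
    '<script type="application/ld+json">'
    '{"@type": "LocalBusiness", "name": "Example Co."}'
    "</script></head>"
    "<body><p>Welcome to our site!</p></body></html>"
)

if __name__ == "__main__":
    extractor = JsonLdExtractor()
    extractor.feed(PAGE)
    print(extractor.documents)
```

The chatbot receives typed fields such as `name` directly, rather than guessing which paragraph of body text holds the business name.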

If you are building a conversational interface or preparing for AI agents to act on behalf of users, you already have the necessary data layer.

We don't rely on guardrails alone. We build the source of truth that makes guardrails effective.

When You Doubt the Results

We show the data.

We put current AI answers for your branded queries side-by-side with what the Sandbox produces.

You see the current output versus the future output. You see the chunks used, the gaps closed, and the contradictions fixed.

No hand-waving. No requests to "trust the process." Just answers.

Why This Doesn't Break

We come from a background of building large-scale systems. We built test infrastructure, retrieval systems, and vector databases for Fortune 500 companies. These systems could not afford failure.

We apply that same discipline here. Version control. Continuous deployment. Verification at every step.

This is engineering, not marketing.